openvino的使用

Date: 2019/08/19 Categories: 工作 Tags: openvino DeepLearningInference

Openvino介绍

intel出品的深度神经网络加速工具, 包括model optimizer和inference server两部分,

model optimizer用于将模型转换为inference server可以部署的格式,

支持多种源格式包括tensorflow, onnx, mxnet等, 但支持程度不一,

比如我们实验时发现wordd_lookup层就不支持.

转换过程

如果模型中包括不支持的层, 需要先将提取模型中的子图 接下来, 我们使用的keras, 所以需要下面几步:

第一步: 将模型转换为frozen graph

from tensorflow.python.framework import graph_io

def freeze_graph(graph, session, output, save_pb_dir='.', save_pb_name='frozen_model.pb', save_pb_as_text=False):

with graph.as_default():

graphdef_inf = tf.graph_util.remove_training_nodes(graph.as_graph_def())

graphdef_frozen = tf.graph_util.convert_variables_to_constants(session, graphdef_inf, output)

graph_io.write_graph(graphdef_frozen, save_pb_dir, save_pb_name, as_text=save_pb_as_text)

return graphdef_frozen

K.set_learning_phase(0)

session = K.get_session()

INPUT_NODE = [t.op.name for t in model.inputs]

OUTPUT_NODE = [t.op.name for t in model.outputs]

frozen_graph = freeze_graph(session.graph, session, [out.op.name for out in model.outputs], save_pb_dir='esim_frozen')第二步: 使用openvino model optimizer转换frozen graph pb到ir

这里的--input和--input_shape是可选的, 这里指定主要是因为openvino不支持dynamic sequence length, 这里指定的batch size为1, 而sequence length为15

/opt/intel/openvino_2019.2.242/deployment_tools/model_optimizer/mo_tf.py --input_model frozen_model.pb --output_dir out --data_type FP32 --disable_nhwc_to_nchw --batch 1 --input a_2,b_2,pos_a_2,pos_b_2 --input_shape '(1,15),(1,15),(1,15),(1,15)'如果是使用docker转换bert模型的话, 命令如下

docker run -v $PWD:$PWD -w $PWD -u `id -u` $IMAGE mo_tf.py --input_model frozen_model.pb --output_dir out --data_type FP32 --disable_nhwc_to_nchw --input input_3,input_4 --input_shape '(1,48),(1,48)'最终转换得到的是三个文件frozen_model.bin, frozen_model.mapping, frozen_model.xml

第三步: 使用openvino进行推理

openvino本身提供了一些例子,在目录intel/openvino/inference_engine/samples/python_samples下, 我们这里介绍一下如何使用openvino转换的bert模型

性能提升

VNNI > AVX512 > AVX2

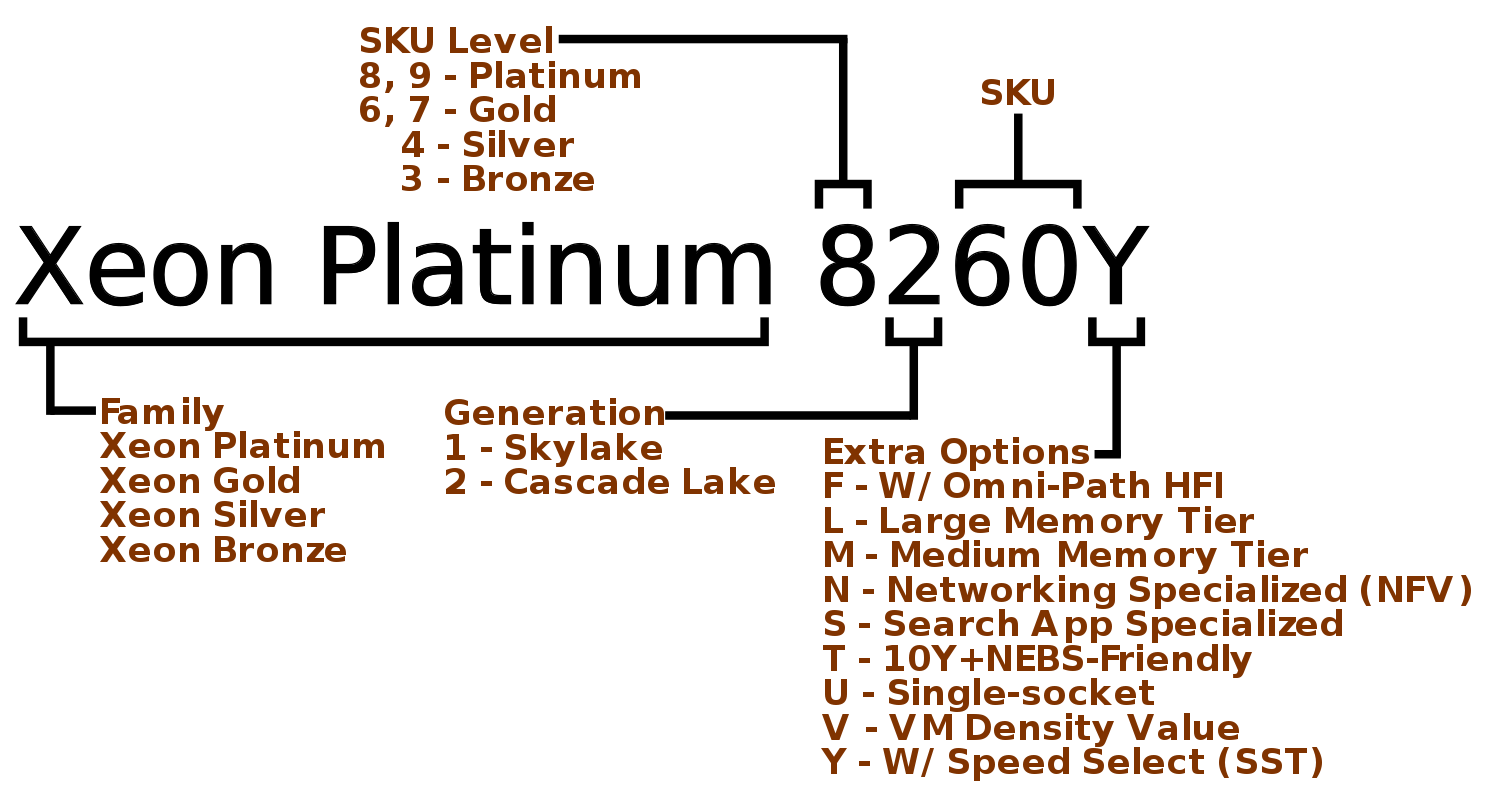

2nd gen Xeon Gold(Cascade Lake)开始支持VNNI

目前我们的大多数服务器还是老的Xeron E5 26xx, 只能用avx2, 最好的服务器我见过的是 Xeon Gold 6133, 也就是1st Gen Xero Gold, 支持AVX512, 不支持VNNI

具体的性能需要使用实际的机器测量

CPU型号判断可以参考Cascade Lake - Microarchitectures - Intel - WikiChip

根据mkl-dnn的讨论, 目前fp16不能在cpu上提供任何性能加速, 而INT8的推断需要avx512, 也就是skylake.

itial BF16 appears in v0.20 (you can check the RC-release here) and in master (that will soon become the v1.0). To test BF16 you need processor with Intel AVX512 instruction set – at least Intel Xeon Scalable aka Skylake server. On these platforms the library would simulate behavior on actual BF16-capable HW which is still not available. Do not expect any performance improvements from BF16 on the current hardware, the only purpose is functional emulation (expect it to be 2x slower than f32).

F16 works on GPU only, which support is available in master.

To make INT8 work you need Intel AVX512 instruction set. Again Skylake server and newer. Cascade lake will lead to better performance. You can also check OpenVino toolkit. This is a dedicate SW for inference on Intel HW. They have a fork of Intel MKL-DNN that has extended support of INT8 on AVX2 and even SSE4.2. So you can use it on most of the systems.

Intel MKL-DNN optimizes Int8 computations for AVX512+ systems. On AVX2 and below we have reference or gemm-based implementations (which would perform more or less fine if you build the library with Intel MKL support). But anyways, with the current level of Int8 support on AVX2 and below systems it would be more correct to say that we don’t support it.

根据mkl-dnn的另一个issue, avx2机器也就是Xeon E5之流使用int8替换fp32后,全连接层的加速比大约是1.5x.

So the INT8 FC is ~1.4x faster than f32 counterpart. That is exactly what is expected on Skylake.